Concurrent Programming

concurrency is not just limited to the kernel

The kernal can use concurrency to run multiple process, our applications can also gain benefit from concurrency as well

Accessing slow I/O devices : overlapping useful work with I/O requests

- Interacting with humans : Each time the user requests some action (say, by clicking the mouse), a separate concurrent logical flow is created to perform the action.

- Reducing latency by deferring work :

- Servicing multiple network clients.

- Computing in parallel on multi-core machines

Modern operating systems provide three basic approaches for building concurrent programs:

- Processes

- I/O multiplexing.

- Threads

12.1 Concurrent Programming with Processes

The simplest way to build a concurrent program is with processes

For example, a natural approach for building a concurrent server is to accept client connection requests in the parent and then create a new child process to service each new client.

12.2 Concurrent Programming with I/O Multiplexing

The basic idea is to use the select function to ask the kernel to suspend the process, returning control to the application only after one or more I/O events have occurred

select is a complicated function with many different usage scenarios

1 |

|

12.3 Concurrent Programming with Threads

A thread is a logical flow that runs in the context of a process.

- The threads are scheduled automatically by the kernel.

- Each thread has its own thread context, including a unique integer thread ID (TID), stack, stack pointer, program counter, general-purpose registers, and condition codes

- All threads running in a process share the entire virtual address space of that process.

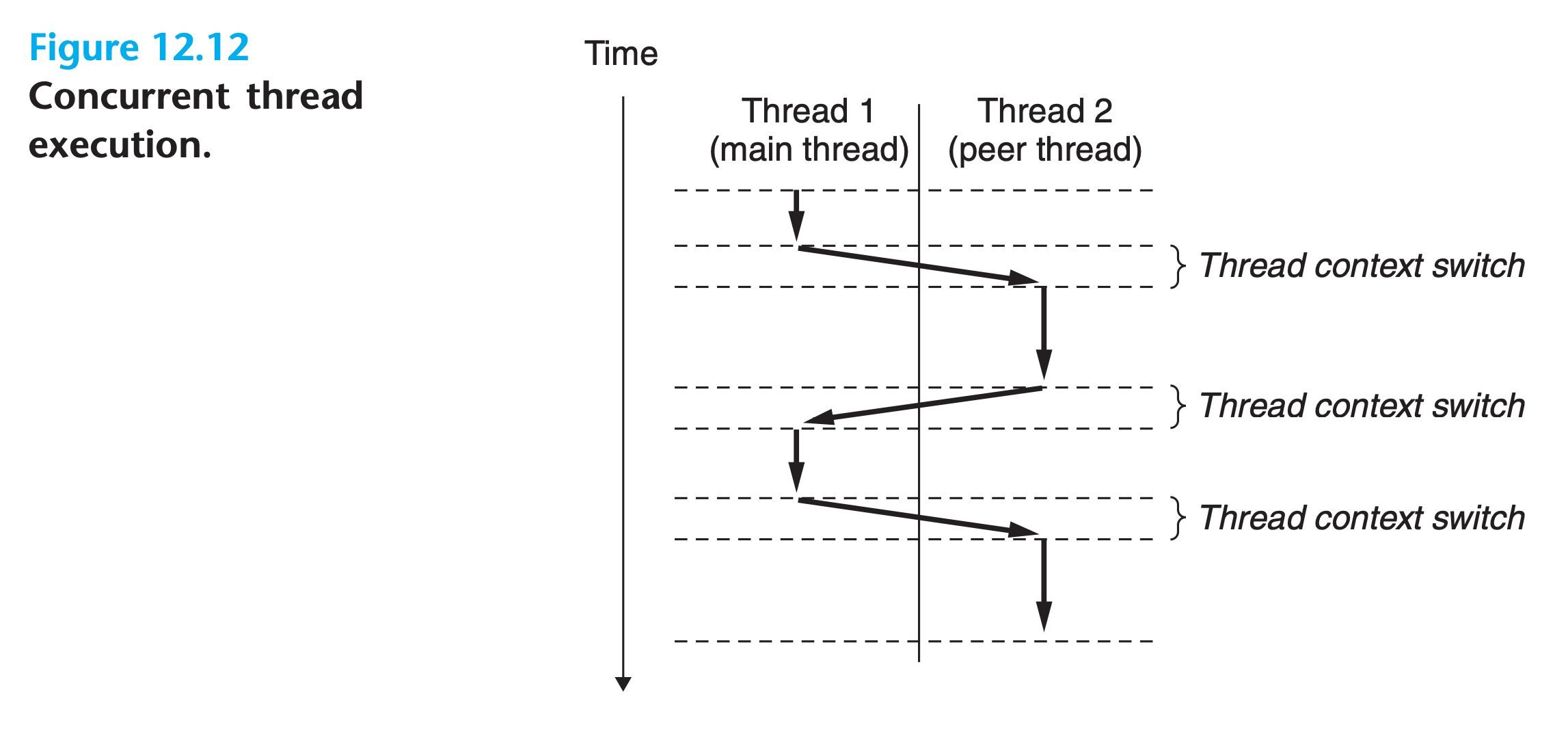

12.3.1 Thread Execution Model

The execution model for multiple threads is similar in some ways to the execution model for multiple processes

Thread execution differs from processes in some important ways.

- Because a thread context is much smaller than a process context, a thread context switch is faster than a process context switch

- Threads are not organized in a parent-child hierarchy. They are organized as a pool of threads !!!

- Independent of which threads were created by which other threads

- The main thread is distinguished from other threads only in the sense that it is always the first thread to run in the process

- A thread can kill any of its peers or wait for any of its peers to terminate.

- Each peer can read and write the same shared data.

12.3.2 Posix Threads

The code and local data for a thread are encapsulated in a thread routine.

- each thread routine takes as input a single generic pointer and returns a generic pointer.

- If you want to pass multiple arguments to a thread routine, then you should put the arguments into a structure and pass a pointer to the structure.(Return is the same)

12.3.3 Creating Threads

1 |

|

The pthread_create function creates a new thread and runs the thread routine f in the context of the new thread and with an input argument of arg

- The

attrargument can be used to change the default attributes of the newly created thread. - When

pthread_createreturns, argumenttidcontains the ID of the newly created thread - The new thread can determine its own thread ID by calling the

pthread_selffunction.

1 |

|

12.3.4 Terminating Threads

A thread terminates in one of the following ways:

- The thread terminates implicitly when its top-level thread routine returns.

- The thread terminates explicitly by calling the

pthread_exitfunction`. If the main thread calls pthread_exit, it waits for all other peer threads to terminate and then terminates the main thread and the entire process with a return value of thread_return. - Some peer thread calls the Linux

exitfunction, which terminates the process and all threads associated with the process. - Another peer thread terminates the current thread by calling the

pthread_ cancelfunction with the ID of the current thread

1 |

|

1 |

|

12.3.5 Reaping Terminated Threads

1 |

|

- The

pthread_joinfunction blocks until thread tid terminates, assigns the generic (void *) pointer returned by the thread routine to the location pointed to bythread_return, and then reaps any memory resources held by the terminated thread.

12.3.6 Detaching Threads

At any point in time, a thread is joinable or detached

- A joinable thread can be reaped and killed by other threads. Its memory resources (such as the stack) are not freed until it is reaped by another thread.

- a detached thread cannot be reaped or killed by other threads. Its memory resources are freed automatically by the system when it terminates.

By default, threads are created joinable.

1 |

|

The pthread_detach function detaches the joinable thread tid

12.3.7 Initializing Threads

The pthread_once function allows you to initialize the state associated with a thread routine.

1 |

|

12.4 Shared Variables in Threaded Programs

12.4.1 Threads Memory Model

registers are never shared, whereas virtual memory is always shared.

These stacks are contained in the stack area of the virtual address space and are usually(Not Always) accessed independently by their respective threads.

Because different thread stacks are not protected from other threads

- if a thread somehow manages to acquire a pointer to another thread’s stack, then it can read and write any part of that stack

12.4.2 Mapping Variables to Memory

Variables in threaded C programs are mapped to virtual memory according to their storage classes:

- Global variables

- At run time, the read/write area of virtual memory contains exactly one instance of each global variable that can be referenced by any thread.

- Local automatic variables

- At run time, each thread’s stack contains its own instances of any local automatic variables

- Local static variables

- As with global variables, the read/write area of virtual memory contains exactly one instance of each local static variable declared in a program

- Each peer thread reads and writes this instance.

Practice Problem 12.6 :white_check_mark:

variable intance referred in main? referred in thread 0? referred in thread 1? ptr Yes Yes Yes cnt No Yes Yes i.m Yes No No msgs.m Yes Yes Yes myid.p0 No Yes No myid.p1 No No Yes

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

char ** ptr ;

void * thread (void * args);

int main(){

long i ;

char * msgs [N] = { "Hello from foo", "Hello from bar" };

ptr = msgs ;

pthread_t tid ;

for (i=0 ; i < N ; i++){

pthread_create(&tid , NULL , &thread , (void*)i );

}

pthread_exit(NULL);

}

void * thread (void * args){

long myid = (long)args;

static int cnt = 0 ;

printf("[%ld] : %s (cnt= %d)\n" , myid , ptr[myid] , ++cnt);

return NULL;

}

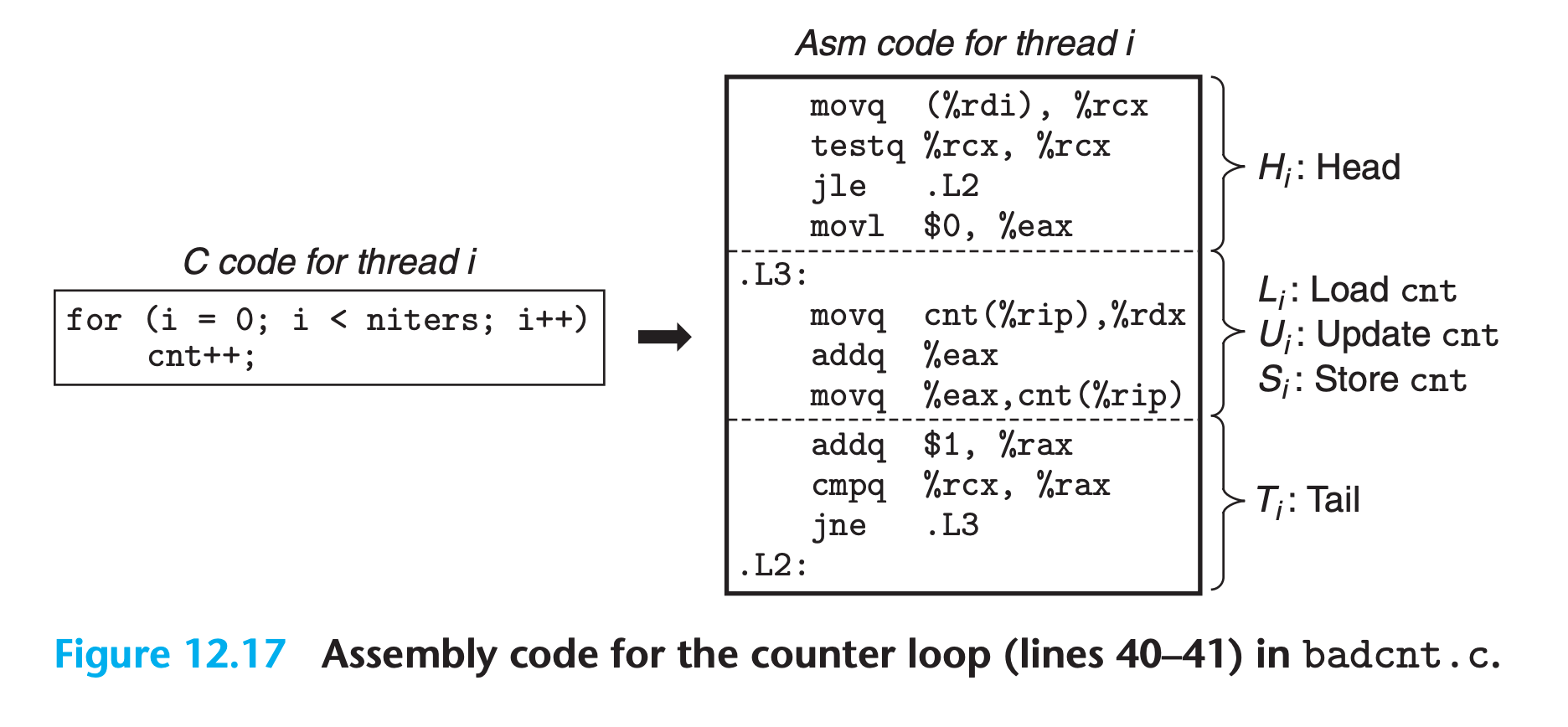

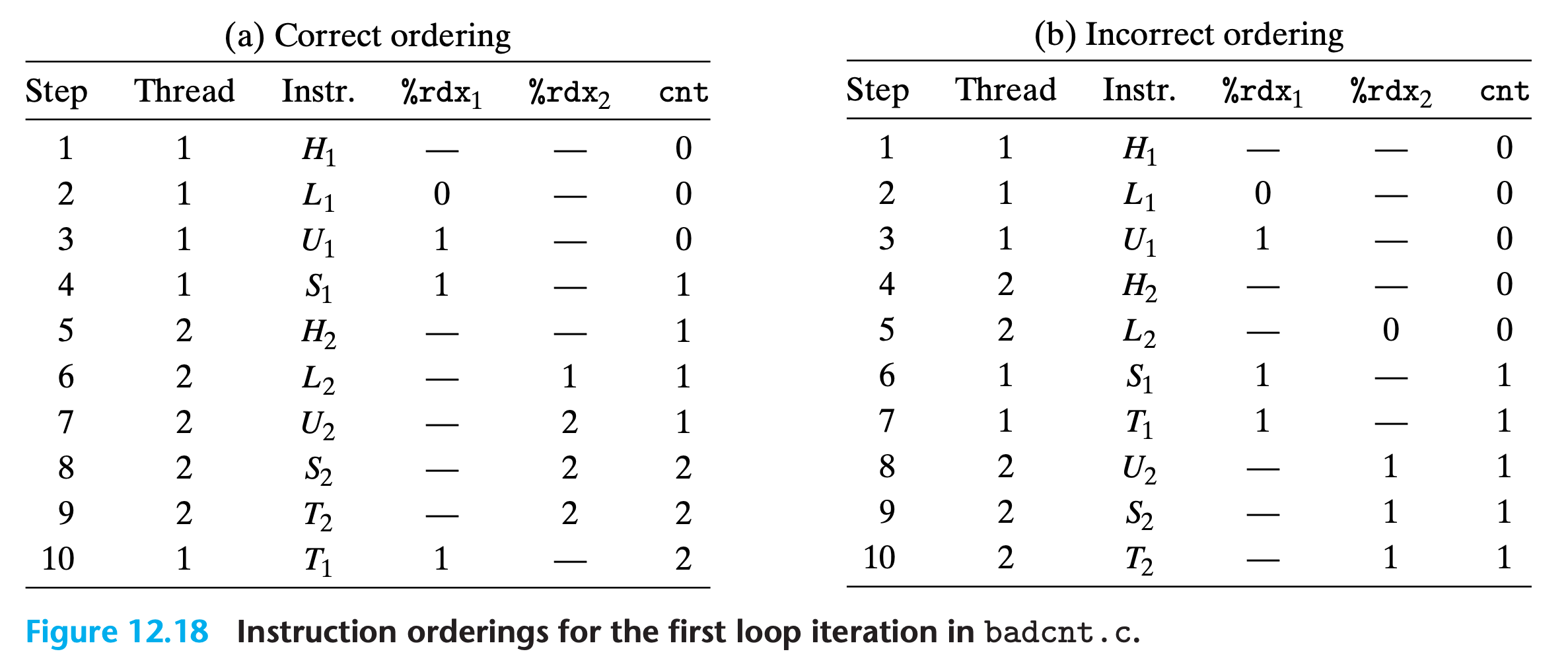

12.5 Synchronizing Threads with Semaphores

Shared variables can be convenient, but they introduce the possibility of nasty synchronization errors.

For example

1 |

|

1 | $ ./bad 200000 |

Why?

Threads may read the unupdated data and increase it !!!

They can even override the correct value !!!

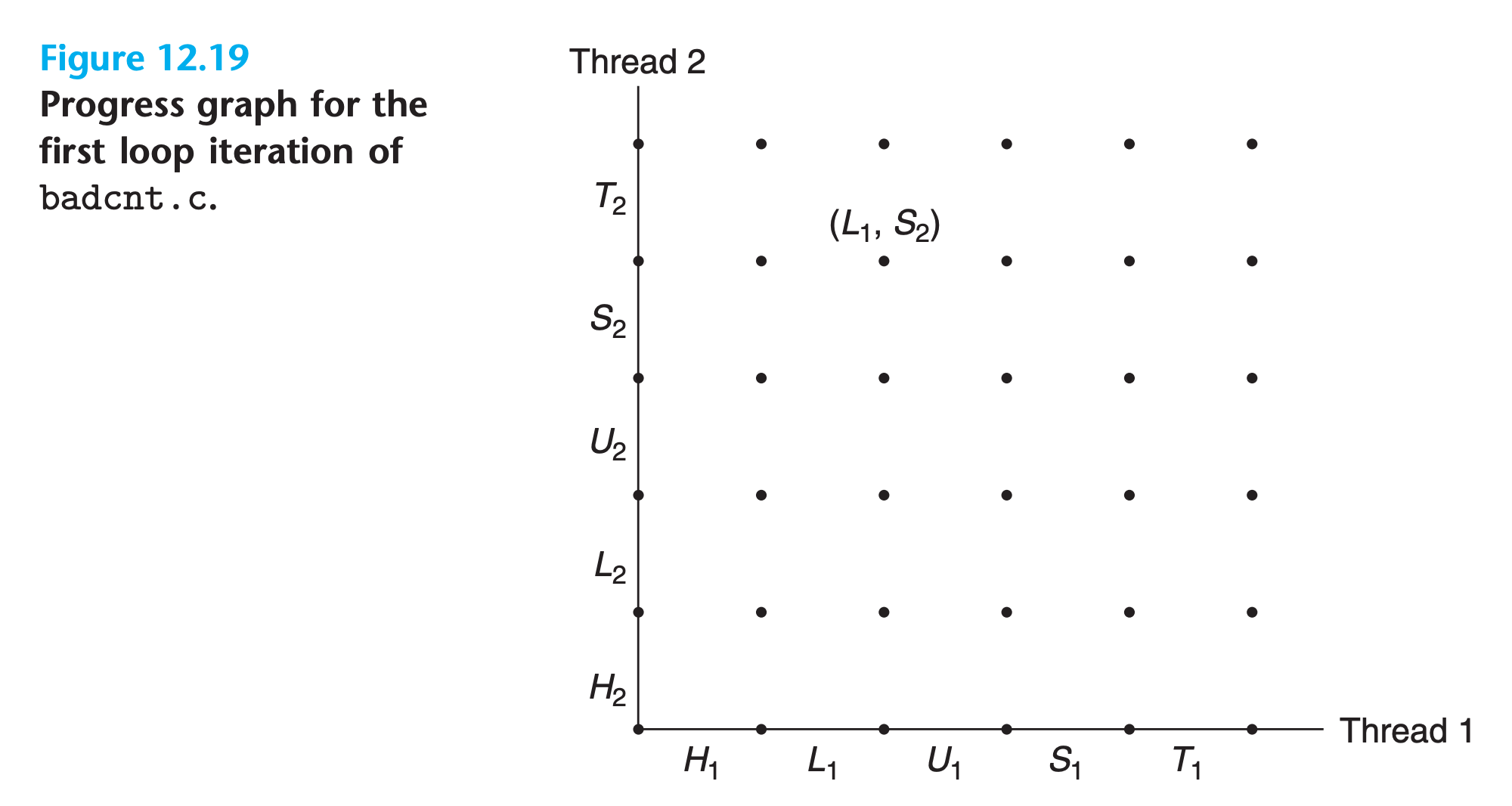

12.5.1 Progress Graphs

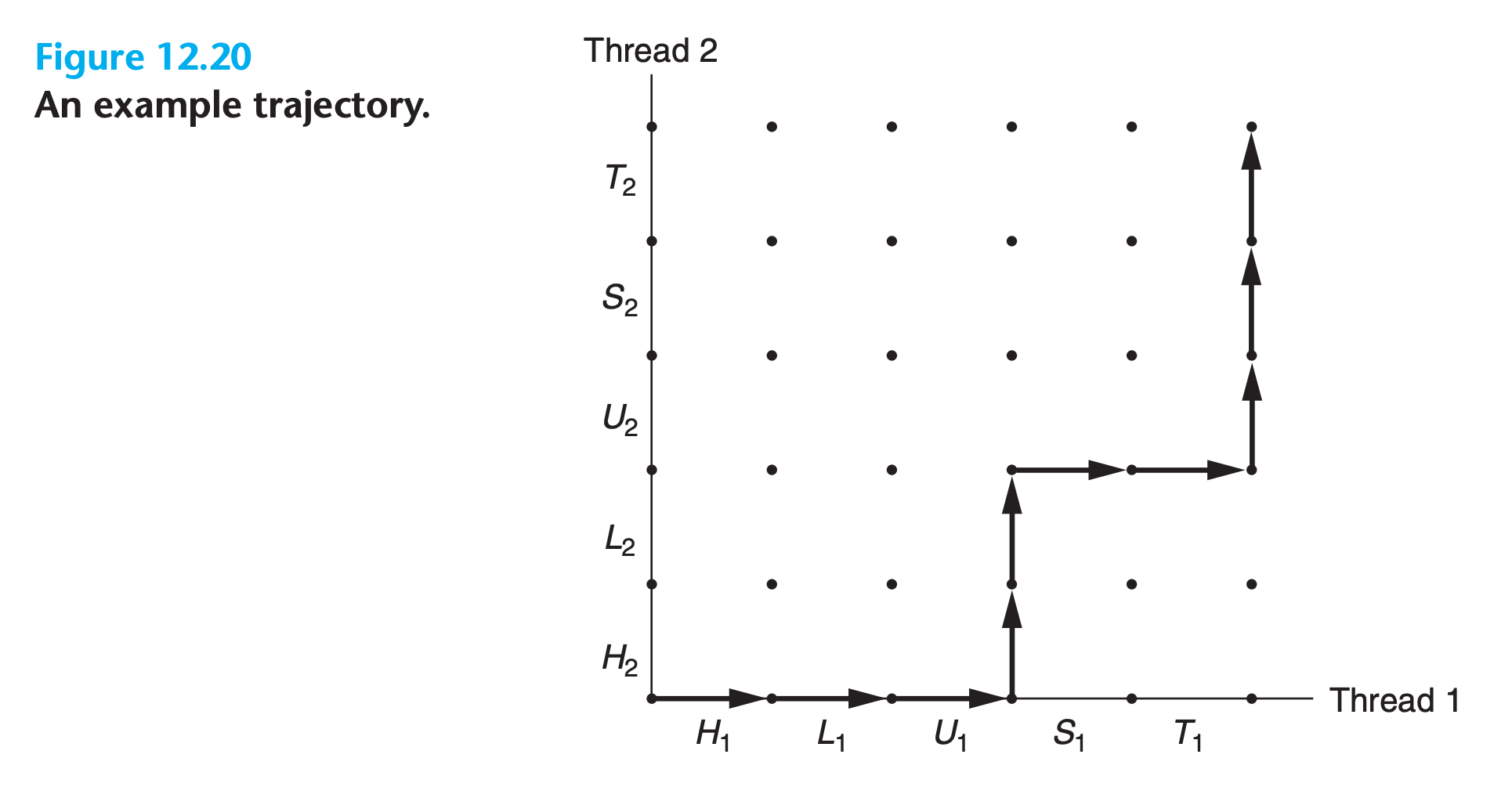

A progress graph models the execution of n concurrent threads as a trajectory through an n-dimensional Cartesian space.

(Multiprocessors behave in ways that cannot be explained by progress graphs.)

- A progress graph models instruction execution as a transition from one state to another. A transition is represented as a directed edge from one point to an adjacent point.

- Legal transitions move to the right or up. Two instructions cannot complete at the same time—diagonal transitions are not allowed. Programs never run backward so transitions that move down or to the left are not legal either.

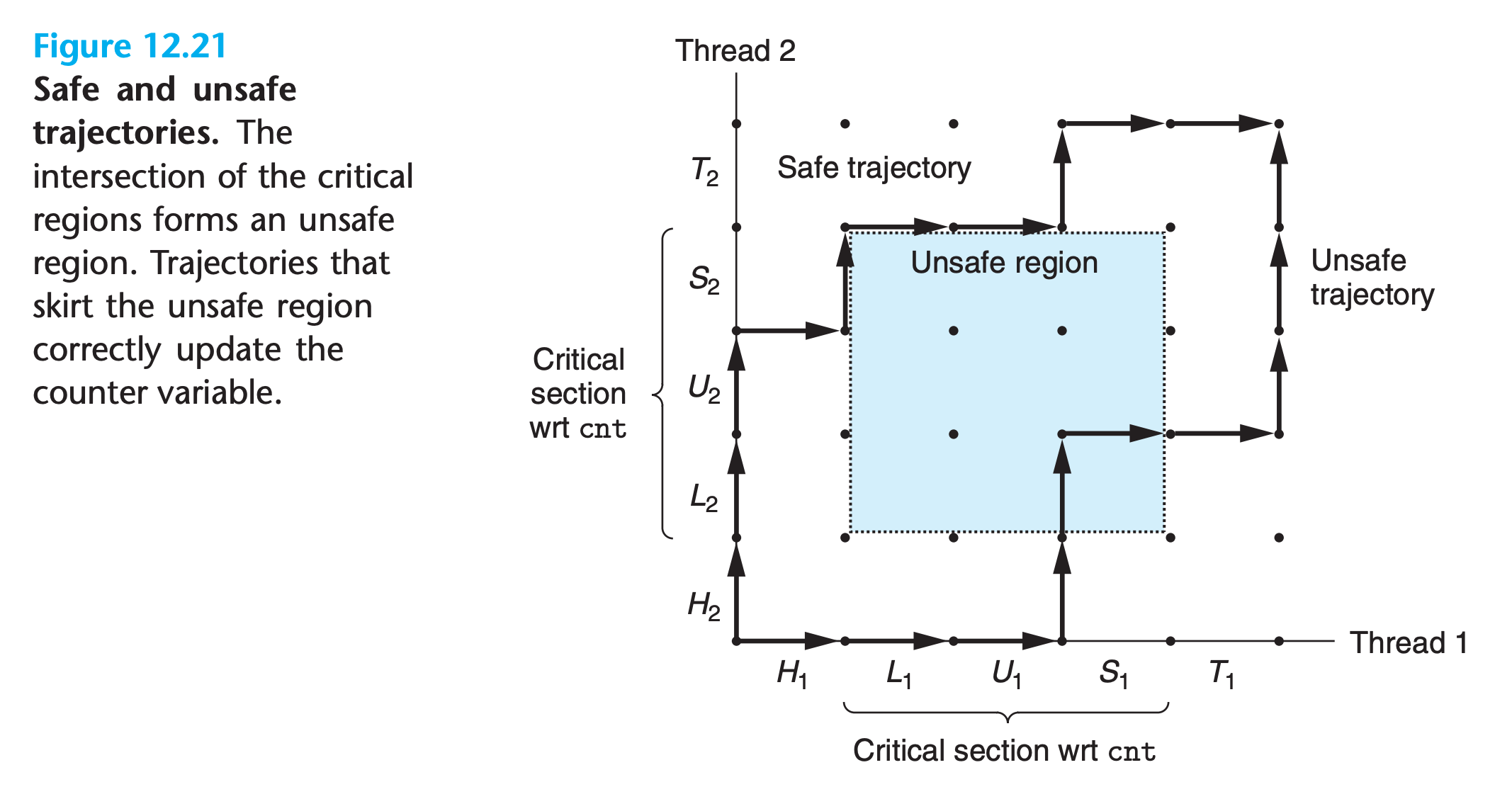

For thread i, the instructions (Li, Ui, Si) that manipulate the contents of the shared variable cnt constitute a critical section that should not be interleaved with the critical section of the other thread.

In other words, we want to ensure that each thread has mutually exclusive access to the shared variable while it is executing the instructions in its critical section

12.5.2 Semaphores

A semaphore, s, is a global variable with a nonnegative integer value that can only be manipulated by two special operations, called P and V

- P(s)

- If s is nonzero, then P decrements s and returns immediately.

- If s is zero, then suspend the thread until s becomes nonzero and the thread is restarted by a V operation.

- After restarting, the P operation decrements s and returns control to the caller.

- V(s)

- The V operation incrementss by 1.

- If there are any threads blocked at a P operation waiting for s to become nonzero, then the V operation restarts exactly one of these threads, which then completes its P operation by decrementing s.

- When several threads are waiting at a semaphore, you cannot predict which one will be restarted as a result of the V

- Both P and V operation is indivisible, that is to say that they cannot be interrupted.

- The definitions of P and V ensure that a running program can never enter a state where a properly initialized semaphore has a negative value

1 |

|

- The sem_init function initializes semaphore sem to value. Each semaphore must be initialized before it can be used.

s means the number of available resource of this type. P(s) means lock, saying that 1 resource is used, any other request of this resource should by blocked. V(s) means unlock, saying that 1 resource is released, request of this resource is available

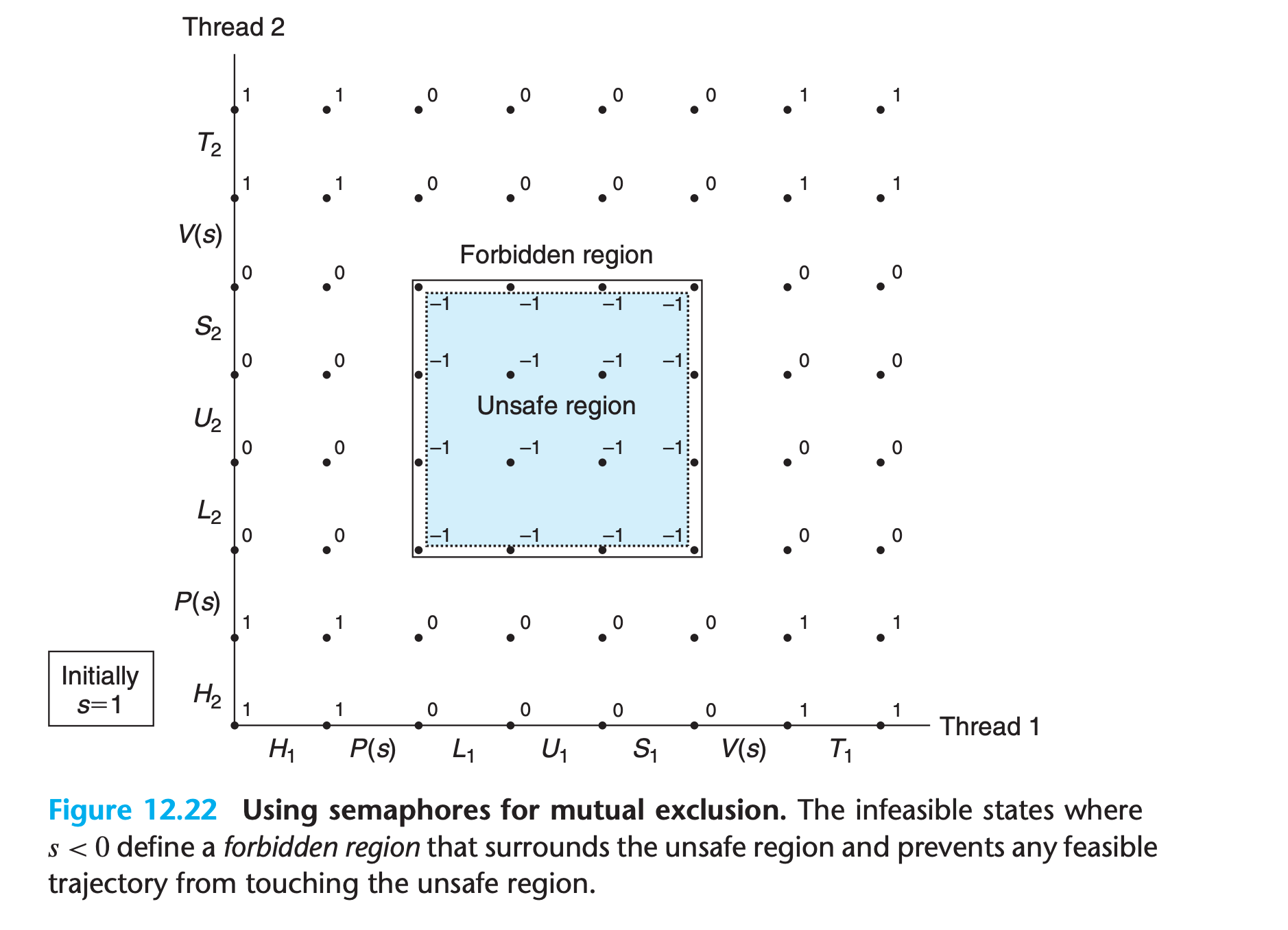

12.5.3 Using Semaphores for Mutual Exclusion

The basic idea is to associate a semaphore s, initially 1, with each shared variable (or related set of shared variables) and then surround the corresponding critical section with P(s) and V (s) operations.

A semaphore that is used in this way to protect shared variables is called a binary semaphore because its value is always 0 or 1

Binary semaphores whose purpose is to provide mutual exclusion are often called mutexes.

Performing a P operation on a mutex is called locking the mutex.

Similarly, performing the V operation is called unlocking the mutex.

Combination of P and V operations creates a collection of states, called a forbidden region, where s < 0.

(-1 is not allowed means that there are only 1 shared resource of this kind, threads cannot access it simultaneously !)

1 | volatile long cnt = 0; /* Counter */ |

12.5.4 Using Semaphores to Schedule Shared Resources

schedule accesses to shared resources : a thread uses a semaphore operation to notify another thread that some condition in the program state has become true

Producer-Consumer Problem

(For example, in GUI mode, user inputs are viewed as producer, handlers are viewed as consumers)

Readers-Writers Problem

Writers must have exclusive access to the object, but readers may share the object with an unlimited number of other readers

12.5.5 Putting It Together: A Concurrent Server Based on Prethreading

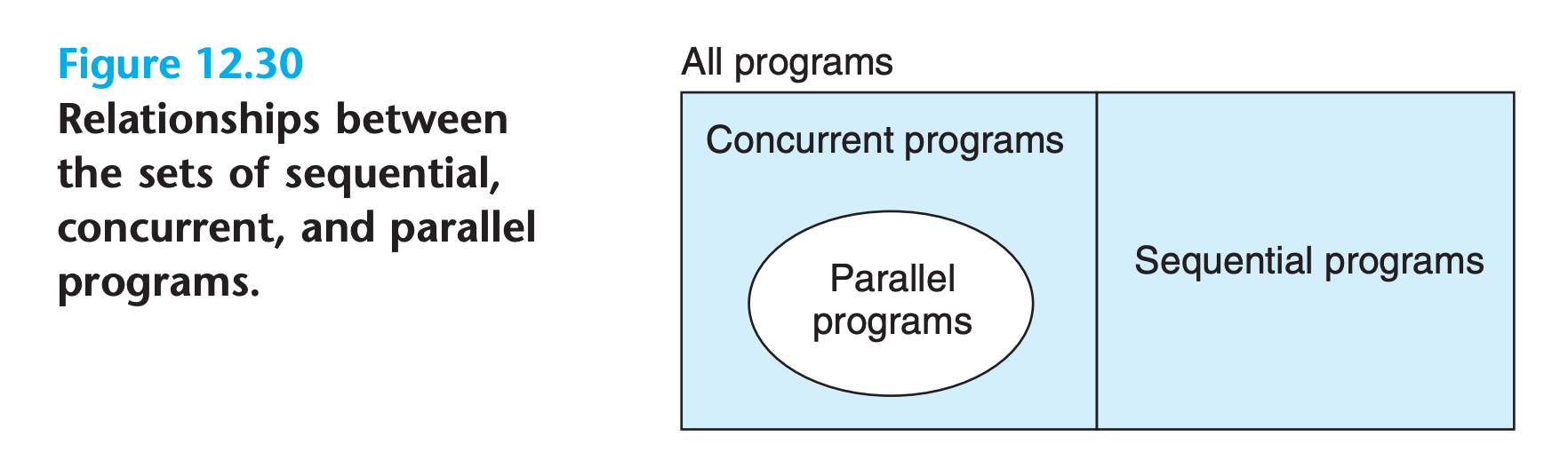

12.6 Using Threads for Parallelism

Operating system kernel schedules the concurrent threads in parallel on multiple cores, rather than sequentially on a single core

- A parallel program is a concurrent program running on multiple processors.

- Synchronization overhead is expensive and should be avoided if possible. If it cannot be avoided, the overhead should be amortized by as much useful computation as possible.(This is why some sequential program will run faster than parallel programs)

Characterizing the Performance of Parallel Programs

12.7 Other Concurrency Issues

- Don’t use thread-unsafe function in your multi-thread program

- All standard C library functions are thread-safe, such as

malloc,free,printf,scanf….. - Beware of deadlock(program wait for some condition which will never happen)

12.8 Summary

Thread-level parallelism

- Multi-thread program doesn’t mean fast program : lock and unlock operation can be really expensive

- Multi-thread program is hard to write, when you start the job, problems keep upbringing

- Even with a good idea and a lot of work, the performance may not improve.

- Before you start coding, parallelization strategy is super important(How your program parallelize?)